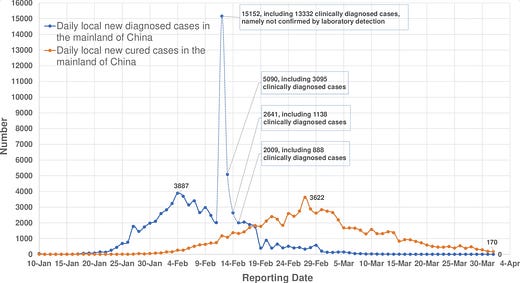

Five years ago, The Rules of Contagion was first published in the UK. But that day, my mind was elsewhere. After seeing the morning COVID-19 data reports, I was busy trying to work out whether we’d made a major error in our analysis of the outbreak. It was 13 February 2020, and China had just reported 15,000 new cases of the disease, a 750% increase on the previous day.

A week earlier, our research group had released some analysis using datasets on infections within China as well as among international travellers. The results suggested that control measures introduced in Wuhan in late January had led to a decline in transmission, and that the outbreak was about to peak in the city. Several media outlets had picked up on this preliminary work, and for a few days, it was looking like we had made the correct call. After rising for weeks, cases finally seemed to be declining.

Then came the spike on 13 February. After almost a month of trying to extract signals from the patchy data sources available, working early mornings through into the night, had we missed something crucial? It turned out that the apparent surge in China was the result of health authorities changing how cases were defined, to include people with less severe symptoms. Revisiting the data, we decided that on balance, there was still enough evidence to suggest an overall decline in transmission. Still, not everyone agreed. One team in Japan reckoned the epidemic in China would peak sometime between late March and late May, with up to 2.3 million new cases diagnosed in a single day.

In hindsight, the decline in COVID-19 cases in Wuhan looks obvious, just as the later declines in other cities would do. But it was anything but obvious at the time, with researchers around the world working to understand the early – and often contradictory – patterns emerging from Asia. From mid-January onwards, our research group had regular discussions with scientists and health agencies across the region, from mainland China and Japan to Hong Kong and Singapore. We swapped notes on what we knew and what we didn’t, with the latter almost always outweighing the former.

Every year in late February and March, I teach on an MSc course about the spread and control of infectious diseases. As part of the course assessment, students have to run a three-day outbreak investigation. They are told that some people have fallen ill and have to piece together fragments of information – from symptoms to social contacts – to discern what has happened. While the students were analysing this fictional outbreak, our team were working with health agencies, governments and global charities, trying to do the same thing for COVID-19. What did we know about the infection? What were the benefits and downsides of different control measures? Where were the gaps in our knowledge?

Among all the uncertainties, it was clear that life would look different for a considerable period of time.

Or was it clear? One of the things that struck me about that period was the disconnect between what was ‘clear’ in some circles but not others. For example, there seemed to be a gap between knowing the fatality risk of a SARS-CoV-2 infection – which emerging evidence suggested would be around 1% for an uncontrolled outbreak in an older-skewing country like the UK – and knowing the implications of that fatality risk.

All that was required to get the key insight was to multiply two numbers together: the size of an uncontrolled epidemic times 1%. Given that 1% of tens of millions of infections is still hundreds of thousands of deaths, it meant COVID was an extremely serious threat, regardless of specific assumptions about the epidemic dynamics. And yet this specific calculation was featured relatively rarely in public discourse at the time.
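To make that multiplication concrete, here is a minimal sketch of the fag-packet version. The population figure (roughly 67 million for the UK) and the three attack rates are illustrative assumptions rather than numbers from our analysis, and `expected_deaths` is just a hypothetical helper name; only the 1% fatality risk comes from the discussion above.

```python
def expected_deaths(population, attack_rate, fatality_risk):
    """Back-of-the-envelope estimate: number infected times fatality risk."""
    infections = population * attack_rate
    return infections * fatality_risk


UK_POPULATION = 67_000_000  # assumption: rough UK population
FATALITY_RISK = 0.01        # ~1% fatality risk, as discussed in the text

# Assumption: illustrative shares of the population infected in an
# uncontrolled epidemic. These are not estimates from any fitted model.
for attack_rate in (0.3, 0.5, 0.8):
    deaths = expected_deaths(UK_POPULATION, attack_rate, FATALITY_RISK)
    print(f"{attack_rate:.0%} infected -> roughly {deaths:,.0f} deaths")
```

Whichever of these attack rates you pick, the answer stays in the hundreds of thousands, which is why the conclusion did not hinge on the finer details of the epidemic dynamics.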

As Tom Whipple put it when I later talked to him for a Times article, the point at which the fatality risk became known was crucial:

> That was when the academic debates became just that: academic. And it was also when the models, for all their sophistication or otherwise, could be broadly speaking replicated on the back of a fag packet.

This gap, between what is ‘clear’ to one person but not another, was part of the reason I wrote my new book Proof: The Uncertain Science of Certainty. What tools do we need to show something is true? How do we convince others? And, crucially, what happens when these methods fail?

These are some of the hardest and most uncomfortable, but also most important, parts of modern science and evidence. The book has been years in the making, so I hope you’ll find it interesting and useful.

Cover image: Xu et al, 2020