The shallowness of Deep Research

How popular apps produce a non-scientist’s idea of quality science

OpenAI recently released Deep Research, which some have described as ‘an AI system that can conduct comprehensive research and generate fully cited papers in minutes, matching the quality of PhD-level work’. A few days ago, ChatGPT Plus users gained access to Deep Research, getting 10 queries per month (I presume because the model is so much more expensive for them to run). So I decided to test it out.

My colleagues and I have been doing a lot of work recently using Bayesian inference to understand SARS-CoV-2 immunological dynamics, as well as building web dashboards and R packages to explore and analyse data. But one of the things that’s always challenging is compiling the various data sources that have already been published into a standardised format, so they can be compared to our new analysis. (I’ve previously written about how this is a challenge in other areas of epidemic science too, such as accessing key infection parameters in real time.)

If AI could do this background research for us, it would be extremely valuable. So I gave Deep Research the following prompt:

Collate data on post-vaccination immune responses against the XBB 1.5 SARS-CoV-2 variant and plot antibody kinetics by time since vaccination for all available studies. I'd like neutralizing antibody responses following mRNA vaccination, and include data on age and whether an individual has evidence of prior infection (if reported). Focus on studies from 2022 onwards. Produce machine readable CSV files with the antibody kinetics by time since vaccination for all available studies. Include raw measurements (i.e. columns with 'time', 'biomarker' and 'value', plus columns with any key covariates like 'age' and 'prior infection'). Use your scientific judgement to plot useful summaries. Standardise as much as possible, and include NA for missing values.

(You may wonder if a different prompt would work better, but remember this is a test of what some are calling a ‘PhD-level’ system – and good PhD students shouldn’t need instructions like ‘don’t invent data’ and ‘don’t randomly ignore half a table’.)
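The prompt asks for data in ‘long’ format: one measurement per row, with `time`, `biomarker` and `value` columns, covariate columns such as age and prior infection, and `NA` wherever a study doesn’t report something. As a rough illustration of that target layout, here is a minimal Python sketch using only the standard library `csv` module. The `study` column, the underscore spelling `prior_infection`, and the function names are assumptions made for this example; they are not what Deep Research produced or what our group uses.

```python
import csv
import io

# Column layout described in the prompt. The 'study' identifier and the
# 'prior_infection' spelling are illustrative assumptions.
REQUIRED_COLUMNS = ["study", "time", "biomarker", "value", "age", "prior_infection"]
MISSING = "NA"


def standardise_row(row: dict) -> dict:
    """Return a row containing every required column, with NA for absent or blank values."""
    out = {}
    for col in REQUIRED_COLUMNS:
        val = row.get(col)
        out[col] = MISSING if val is None or str(val).strip() == "" else str(val)
    return out


def write_long_csv(rows, handle) -> None:
    """Write rows in long format (one measurement per line) to a file-like handle."""
    writer = csv.DictWriter(handle, fieldnames=REQUIRED_COLUMNS)
    writer.writeheader()
    for row in rows:
        writer.writerow(standardise_row(row))


def to_csv_text(rows) -> str:
    """Convenience wrapper: return the long-format CSV as a string."""
    buf = io.StringIO()
    write_long_csv(rows, buf)
    return buf.getvalue()
```

Any column a source study doesn’t report comes out as `NA` rather than being silently dropped, which is the behaviour the prompt asks for when standardising across studies.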

When it asked follow-up questions about exactly what to extract (e.g. IC50 or IC80 neutralisation titre), I again told it to ‘Use your scientific judgement.’

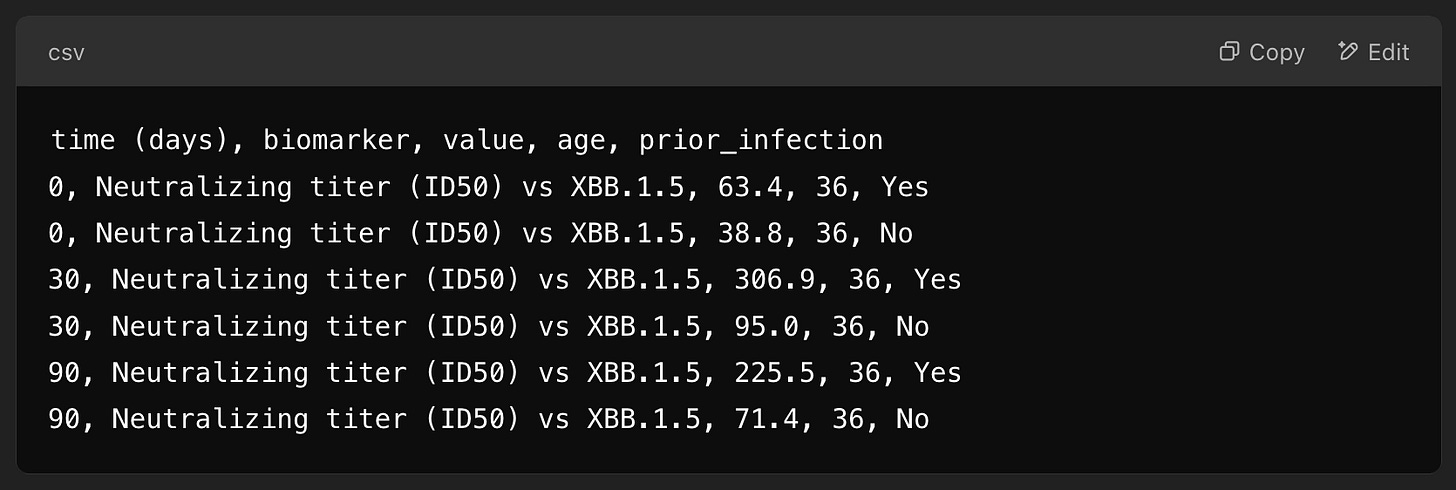

This was the resulting CSV table that Deep Research produced:

Which was somewhat underwhelming, given I already knew that our paper from last year contains hundreds of measurements of XBB 1.5 responses in its supporting dataset, which would have been an obvious starting point. Deep Research did also produce a long written summary (which you can view here). This was OK as a top-level summary of a few papers, but not of rigorous research quality – and not the correct response to the original problem we were trying to investigate. In practice, I’d be tempted to use a tool like Deep Research to check I haven’t missed anything (i.e. the ‘tell me why I’m wrong’ approach I’ve written about before), but I wouldn’t leave it to come up with original research outputs.

Let’s go back to those claims that Deep Research functions at the level of a PhD student. There are two problems with these claims. First: LLMs can’t independently produce PhD-quality work in general. Or at least, I haven’t seen evidence they can produce work anywhere near the quality or depth of work produced by the PhD students in our group. (There may be some very specific fields and research questions where LLMs are better at mimicking human outputs, but this doesn’t help me and my colleagues – and probably won’t help many other fields either.)

Which brings us on to the second problem. We don’t train PhD students so they can continually produce papers of similar quality to their first one. We help train them so they will get better, and grow as scientists. Even if AI could match early PhD-level output, having an AI ‘scientist’ who needs close supervision and guidance forever is not nearly as useful as non-scientists might assume. As I’ve written about previously, it would just create an unscalable and unmanageable team dynamic.

In recent years, I’ve repeatedly noticed advocates for AI-as-a-scientist presenting solutions that don’t really solve the problems we often face as scientists. LLMs are getting very good at certain tasks, particularly for high-training-data-but-low-variability questions like tidying code bases or writing simple functions. But this is not the same as doing science. It’s similar to the focus on exam success as reflecting ‘peak intelligence’. We don’t learn – or at least, we shouldn’t – merely to be good at exams. We do assessments as part of a learning process, to consolidate knowledge and help us reach the next step in how we eventually use that knowledge.

Against this background of claims about AI scientists in the past couple of years, I’ve seen more and more PhD applications that include project proposals that have been transparently copied and pasted from ChatGPT. This is one of the dangers of telling people AI can produce quality scientific work; when objectively scored, the ChatGPT proposals I’ve seen rank among the weakest. Statements are too generic, ideas unoriginal, and references poorly chosen (and occasionally erroneous). From what I’ve seen of Deep Research versus GPT-4, proposals generated with Deep Research would probably score a bit higher, but still not high enough to get shortlisted.

If we insist on drawing human comparisons, perhaps it would be more accurate to say that current LLM-based systems are typically operating scientifically at the level of a rather lazy – and ultimately unsuccessful – PhD applicant.

If you’re interested in the scientific search for truth, and how AI is affecting this, my new book Proof: The Uncertain Science of Certainty is available to pre-order now.

Adam, thank you for sharing your experience; I am still trying to get my head around these issues. Two things that I find good guidance in here:

1. We don’t train PhD students so they can continually produce papers of similar quality to their first one.

2. We help train them so they will get better, and grow as scientists

We do assessments as part of a learning process, to consolidate knowledge and help us reach the next step in how we eventually use that knowledge

As you say, Deep Research is great for the right use cases (checking rather than origination). I'd add low-effort exploration of adjacencies, too.

I've written about how the non-obvious implication of this is that it will (/should?!) change *how* we approach seeking answers – moving to a 'who to ask' paradigm, rather than a 'how to answer' one.

The people & orgs that win will be those that manage to incentivise *more* human collaboration, rather than just producing more AI-generated research & analysis.

Would love your thoughts – https://thefuturenormal.substack.com/p/chatgpts-deep-research-and-thinking