When the underlying data doesn't speak for itself

How 'results' about vaccines aren't always quite what they seem

The US Department of Health and Human Services recently circulated a document among politicians to explain its decision to not recommend COVID vaccines during pregnancy. As

has previously summarised, there’s a lot of evidence to support such vaccination: ‘It’s effective. It’s safer than the disease. It helps during pregnancy and the first few months of life,’ she noted. So what is this new evidence leading HHS to not recommend the vaccine? And how well does it hold up to scrutiny?

The citations given in the document aren’t fictional this time, but they do illustrate some common misconceptions about how to read and interpret scientific results, and the traps people can easily fall into when analysing cause and effect.

Unadjusted confounders

First, the letter cites a 2023 study as evidence that pregnant women who received a COVID vaccine – particularly early in pregnancy – tend to have higher rates of miscarriage. At first glance, this might seem to be the case, given these numbers were reported in the paper abstract:

Miscarriage occurred at a rate of 3.6 per 10 000 person-days among remotely vaccinated women and 3.2 per 10 000 person-days among those recently vaccinated, in contrast to a rate of 1.9 per 10 000 person-days among unvaccinated women

‘The underlying data speaks for itself,’ said an HHS spokesperson.

Except it doesn’t.

If someone just compares the raw numbers of miscarriages in vaccinated and unvaccinated women, they are missing a crucial source of bias. Specifically, some factors – like pregnancy history and underlying health – may influence both the chances of getting vaccinated and the chances of miscarriage. Just because the two are correlated, it doesn’t mean one is causing the other.

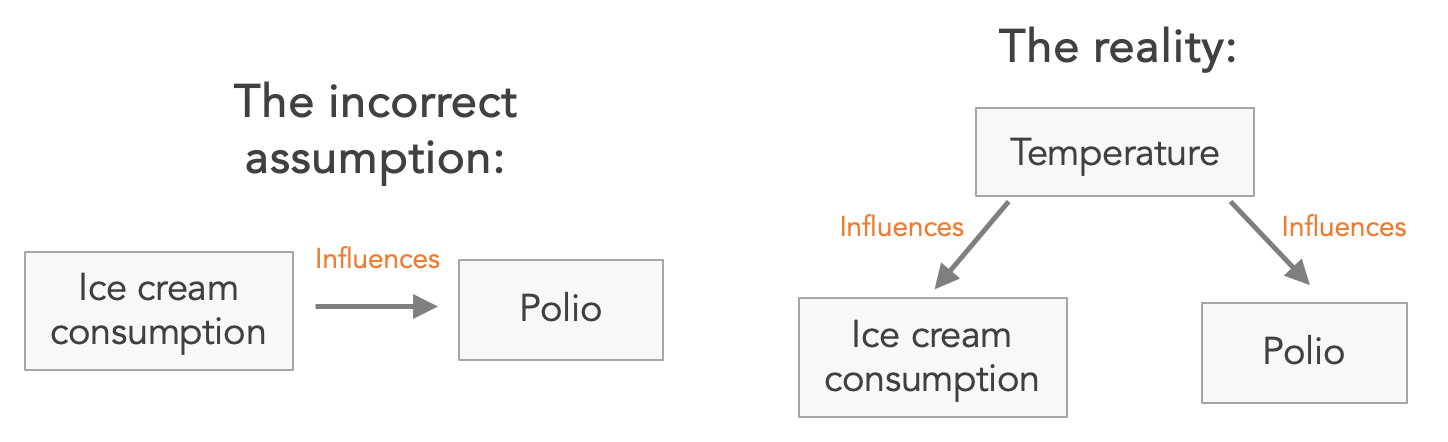

To give a historical example, in the 1940s public health officials noticed that polio cases tended to increase during periods of higher ice cream consumption. They even recommended an ‘anti-polio’ diet that avoided sugary treats. But in reality, it was the underlying temperature that was driving both polio cases and ice cream purchases. Temperature was a confounder, as statisticians would call it.
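Here is a minimal sketch of how a confounder can manufacture a crude association. The counts are made up for illustration and do not come from any study discussed here; the strata and function names are hypothetical too. Within each stratum the exposed and unexposed groups have identical risk, yet pooling the strata gives an apparent risk ratio well above 1, simply because exposure is more common in the higher-risk stratum. The Mantel-Haenszel estimate used below is one standard way to combine strata, chosen here for simplicity; it is not necessarily the method any particular paper used.

```python
# Hypothetical counts, invented purely for illustration.
# Each stratum: (exposed_events, exposed_total, unexposed_events, unexposed_total)
STRATA = {
    "higher baseline risk": (80, 800, 20, 200),
    "lower baseline risk": (4, 200, 16, 800),
}


def risk_ratio(exp_events, exp_total, unexp_events, unexp_total):
    """Risk in the exposed group divided by risk in the unexposed group."""
    return (exp_events / exp_total) / (unexp_events / unexp_total)


def crude_risk_ratio(strata):
    """Pool all strata together, ignoring the confounder."""
    totals = [sum(column) for column in zip(*strata.values())]
    return risk_ratio(*totals)


def mantel_haenszel_risk_ratio(strata):
    """Combine stratum-specific comparisons into one adjusted risk ratio."""
    numerator = 0.0
    denominator = 0.0
    for a, n1, c, n0 in strata.values():
        n = n1 + n0  # everyone in this stratum
        numerator += a * n0 / n
        denominator += c * n1 / n
    return numerator / denominator


if __name__ == "__main__":
    for name, counts in STRATA.items():
        print(f"{name}: risk ratio = {risk_ratio(*counts):.2f}")
    print(f"crude (pooled): risk ratio = {crude_risk_ratio(STRATA):.2f}")
    print(f"adjusted (Mantel-Haenszel): risk ratio = {mantel_haenszel_risk_ratio(STRATA):.2f}")
```

In this toy dataset the crude comparison suggests the exposed group has more than double the risk, while the stratified comparison shows no difference at all.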

Similarly, the authors of that 2023 miscarriage study adjusted for the following potential confounders that might separately influence both getting vaccinated and having a miscarriage: age, rurality, neighbourhood income quintile, immigration status, existing health conditions, diagnosed obesity, number of previous births, diagnosed infertility and calendar period for date of conception.

After accounting for these factors, there was no meaningful difference in the risk of miscarriage between vaccinated women and unvaccinated women. For women vaccinated early in pregnancy, the adjusted risk of miscarriage was 2% lower (but this could easily be due to chance, given the 95% confidence interval ranged from a 9% lower risk to 7% higher). For women vaccinated later in pregnancy, the risk was the same as for unvaccinated women (95% confidence interval: 7% lower to 8% higher).
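The reading of those intervals can be sketched in a few lines. This assumes the percentages correspond to ratios of 0.98 (0.91 to 1.07) and 1.00 (0.93 to 1.08), which is a back-conversion from the figures quoted above; the function name is hypothetical. An interval that spans 1 means the data are compatible with no difference at the conventional 95% level.

```python
def summarise_ratio(estimate, lower, upper):
    """Express a ratio and its 95% interval as percentage changes in risk."""
    def to_percent(ratio):
        return round((ratio - 1.0) * 100, 1)

    return {
        "change_pct": to_percent(estimate),
        "interval_pct": (to_percent(lower), to_percent(upper)),
        # If the interval spans 1, "no difference" is among the plausible values.
        "compatible_with_no_difference": lower <= 1.0 <= upper,
    }


if __name__ == "__main__":
    # Ratios assumed from the percentages quoted in the text.
    print(summarise_ratio(0.98, 0.91, 1.07))
    print(summarise_ratio(1.00, 0.93, 1.08))
```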

Multiple comparisons

A second study cited in the HHS letter is a 2022 paper that looked at pregnancy outcomes in vaccinated and unvaccinated women. Its primary focus was on preterm birth and small size for gestational age. After adjusting for potential confounders including maternal age, body mass index, previous births, and smoking, the authors found no evidence that vaccination was linked to worse outcomes:

The rate of preterm birth was 5.5% in the vaccinated group compared to 6.2% in the unvaccinated group (p = 0.31). Likewise, the rates of small for gestational age were comparable between the two groups (6.2% vs. 7.0% respectively, p = 0.2).

However, the study also looked at a number of secondary outcomes, slicing the data in different ways and comparing vaccination with a large number of other measurements, from caesarean delivery to umbilical acidity. One such finding that jumped out was a potential difference in the second trimester:

Women who were vaccinated in the second trimester were more likely to have a preterm birth (8.1% vs. 6.2%, p < 0.001). This association persisted after adjusting for potential confounders (adjusted odds ratio 1.49, 95% CI: 1.11, 2.01).

But remember, the authors looked at a lot of different outcomes. Here’s a table of all the things they considered (noting that these are the raw values that don’t account for confounding):

Just like a roulette player having another spin, the more times we compare things in a dataset, the bigger the chance that we might mistake a random coincidence for a genuine, consistent effect. Just because the 15th spin won that one time, it doesn’t mean the 15th spin will always win.
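The roulette intuition can be made concrete with a short calculation. This is a sketch under simplifying assumptions: every comparison is treated as an independent test at the conventional 0.05 threshold, with no true effect anywhere. The numbers of comparisons are illustrative, not a count taken from the paper, and the function names are hypothetical.

```python
def chance_of_false_alarm(n_comparisons, alpha=0.05):
    """Probability that at least one of n independent null tests has p < alpha."""
    return 1.0 - (1.0 - alpha) ** n_comparisons


def bonferroni_threshold(n_comparisons, alpha=0.05):
    """Per-test threshold that keeps the overall false-alarm chance at or below alpha."""
    return alpha / n_comparisons


if __name__ == "__main__":
    for n in (1, 5, 15, 30):
        print(
            f"{n:>2} comparisons: "
            f"chance of at least one false alarm = {chance_of_false_alarm(n):.0%}, "
            f"Bonferroni threshold = {bonferroni_threshold(n):.4f}"
        )
```

Under these assumptions, 15 comparisons give roughly a 54% chance of at least one spurious 'significant' result. Outcomes in a real study are usually correlated with one another, so the true figure will differ, but the direction of the problem is the same.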

This isn’t the only limitation of the preterm estimate above. The authors also note that, even if the raw correlation is genuine, there is likely to be another factor influencing both vaccine timing and outcome:

there were no preterm births within two weeks after vaccine receipt in the second trimester, and the majority of the preterm births were in the late preterm period, suggesting that unmeasured confounding may have contributed to the results.

In other words, if vaccines were causing preterm births, we’d expect to see some clustering of preterm births shortly after vaccination, especially within the most biologically plausible window (e.g. days or weeks after vaccination). We’d also expect a broader distribution of gestational ages among those births. In contrast, the births observed in the data were mostly late preterm, which tend to be due to underlying health or demographic factors rather than sudden events.

Avoiding predictable data pitfalls

I’ve previously written about how vaccine quirks often aren’t what they seem, and why dynamic health problems can be so counterintuitive. These newly cited studies are no different. Even if some anti-vaccine groups misrepresent data deliberately to push a particular message, others may read the accompanying citations and genuinely wonder whether the raw patterns in the data are something to be concerned about.

The communication challenge is that those raw numbers are often there in the paper, and often in the main summary. But raw numbers don’t necessarily reflect reliable results. Without proper context and adjustment, they can mislead both well-meaning readers and policymakers. In health research, it’s dangerously easy to mistake coincidence for cause, or noise for signal. And that could lead to some dangerously bad decisions.

Cover image: Ted Balmer via Unsplash

If you’re interested in reading more about the assumptions underlying data interpretation, here’s my piece from last year:

We can't avoid models

There’s a simplistic dichotomy that I often see made in science. On one hand, goes the stereotype, we have ‘data-driven’ insights (i.e. pure observations, no models or assumptions involved). And on the other, we have ‘model-driven’ insights (i.e. assumption-laden, theoretical, not data).

Adam, thank you. Of course you are correct here. However, the opposite problem also occurs and it can cause huge problems. That is, researchers sometimes treat all manner of variables as confounders when they are in fact not valid confounders. Such faulty adjustment can cause a real effect to disappear from the results.

All scientists should know that a confounder is not just any variable that reduces the estimated association ("effect size") when it's included in a regression. A valid confounder needs to cause the outcome — formally, at least be independently associated with the outcome — and it must not be caused by the exposure.

Here is a real example. I am omitting key details. A research team observed an increasing rate of diagnoses of a medical condition. They suspected that the increase might be an artifact of a policy change rather than a true increase. So they adjusted for the policy change, comparing the diagnosis rates before and after the policy change. That made the increased diagnosis rate largely disappear. From that they concluded that the increased rate did not reflect reality. (They did not publish the unadjusted data, but they should have.) They did not cite any evidence that the policy change caused an increasing rate of diagnosis. From then on, that team and their collaborators variously claimed or simply assumed that the observed increased rate of diagnosis was not real. That steered the course of investigation into the condition in the wrong direction.

The analysis that used faulty adjustment simply adjusted for a date (year). Any event that year would have produced exactly the same result. For example, adjustment for the year of some political election would lead to the false conclusion that there was no actual trend in the medical condition.

In sum, studies should show raw, unadjusted results as well as adjusted results, and they should justify the validity of each included confounder.

I've never understood the concept of the data speaking for themselves. Trump speaks for himself and it's complete gobbledegook.